Introduction

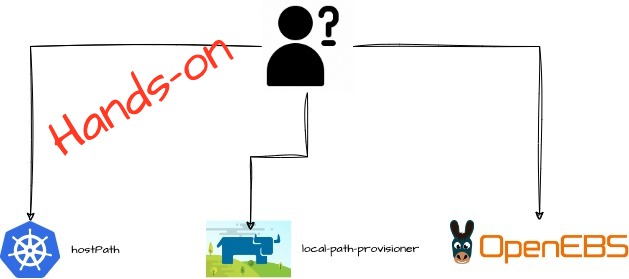

Let’s continue from where we left off in the previous post about using local storage on Kubernetes worker nodes. This time, we dive into the hands-on part and look at how to install, configure, and work with three local storage options: HostPath, Rancher local-path-provisioner, and OpenEBS.

I will walk you through the setup of each option, and then we will see how it behaves in practice with an example. By the end of this post, you will be able to set up these solutions and get local storage up and running in your Kubernetes cluster.

1. HostPath Configuration

HostPath is a simple and manual storage option. Here is how to use it in your Kubernetes cluster:

Steps

- Create a Pod specification that uses HostPath

apiVersion: v1
kind: Pod
metadata:
  name: hostpath-pod
spec:
  containers:
  - name: hostpath-container
    image: nginx
    volumeMounts:
    - mountPath: /usr/share/nginx/html
      name: hostpath-volume
  volumes:
  - name: hostpath-volume
    hostPath:
      path: /data/hostpath
      type: Directory

In this configuration, /data/hostpath on the node is mounted into the pod at /usr/share/nginx/html. The hostPath field specifies the directory from the node to use.

- Apply the YAML file

kubectl apply -f hostpath-pod.yaml

NOTE: Make sure the /data/hostpath directory exists on the node; with type: Directory, the volume cannot be mounted and the pod will not start if it is missing. HostPath is straightforward, but it doesn’t support dynamic provisioning, so you will need to handle everything manually.
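If pre-creating the directory on every node is inconvenient, Kubernetes also accepts `type: DirectoryOrCreate` for hostPath volumes, which creates the directory when it is missing. The sketch below is one hedged variant of the pod above. The file name `hostpath-pod-create.yaml` and the pod name `hostpath-pod-create` are illustrative assumptions, not part of the original setup, and the script only writes the manifest so you can review it before applying.

```shell
#!/bin/sh
# Sketch: write a variant of the HostPath pod that lets the kubelet create
# the node directory if it is missing (type: DirectoryOrCreate).
# The file name and pod name are illustrative assumptions.
manifest="hostpath-pod-create.yaml"

cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-pod-create
spec:
  containers:
  - name: hostpath-container
    image: nginx
    volumeMounts:
    - mountPath: /usr/share/nginx/html
      name: hostpath-volume
  volumes:
  - name: hostpath-volume
    hostPath:
      path: /data/hostpath
      # Created as an empty directory on the node when absent
      type: DirectoryOrCreate
EOF

echo "wrote $manifest"
# Review it, then apply it the same way as before:
#   kubectl apply -f hostpath-pod-create.yaml
```

Everything else about HostPath stays manual: the data still lives on whichever node the pod lands on.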

2. Rancher local-path-provisioner

Rancher local-path-provisioner can be installed with Helm. It allows you to dynamically provision storage by creating PVCs that map to predefined directories on the host.

Steps

- Install local-path-provisioner:

git clone https://github.com/rancher/local-path-provisioner.git
cd local-path-provisioner
helm install local-path-storage ./deploy/chart/local-path-provisioner --namespace test-ns --create-namespace

Note: Ensure that /opt/local-path-provisioner is pre-created on all nodes.

- Verify the installation:

helm ls -n test-ns | grep local-path-provisioner
kubectl get deploy -n test-ns | grep local-path-provisioner
kubectl get sc local-path

- Create test PVC and Pod:

# test_pvc_pod.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc123
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  storageClassName: local-path
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
spec:
  containers:
  - name: nginx-container
    image: nginx:latest
    volumeMounts:
    - name: nginx-persistent-storage
      mountPath: /usr/share/nginx/html
  volumes:
  - name: nginx-persistent-storage
    persistentVolumeClaim:
      claimName: nginx-pvc123

- Verify PVC and Pod:

kubectl get pvc

kubectl get pod -o wide

- Test by creating a file inside the container

kubectl exec -it nginx-pod -- bash
echo "this is a test file" > /usr/share/nginx/html/test.txt
cat /usr/share/nginx/html/test.txt

- Check on the worker node where the pod is scheduled:

ssh ubuntu@node-ip
ls /opt/local-path-provisioner/<pvc-directory>/test.txt

Storage Limitation

Even though the PVC requests 5Gi, that size is not enforced: the volume is just a directory on the host filesystem, so the pod can write files larger than the requested capacity. For example:

kubectl exec -it nginx-pod -- bash
dd if=/dev/zero of=/usr/share/nginx/html/test.dat bs=1G count=10

This results in a 10GiB file being created, even though the PVC requested only 5Gi.
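Since the requested size is not enforced here, one way to notice overshoot is to compare the on-disk usage of a volume directory with the PVC request. The helper below is a minimal sketch of that idea and not a feature of local-path-provisioner; `check_usage` is a hypothetical function name, and you would run it on the node against the PVC's directory under /opt/local-path-provisioner.

```shell
#!/bin/sh
# Sketch: compare a directory's on-disk usage with a requested size.
# check_usage is a hypothetical helper, not a local-path-provisioner feature.
# Usage: check_usage <directory> <requested-size-in-KiB>
check_usage() {
    dir=$1
    limit_kib=$2
    # du -sk prints the total usage in KiB followed by the path
    used_kib=$(du -sk "$dir" | awk '{print $1}')
    if [ "$used_kib" -gt "$limit_kib" ]; then
        echo "OVER: $dir uses ${used_kib} KiB, request was ${limit_kib} KiB"
        return 1
    fi
    echo "OK: $dir uses ${used_kib} KiB of ${limit_kib} KiB"
    return 0
}

# Example with a 5Gi request (5 * 1024 * 1024 KiB); replace the path with the
# PVC directory on the node:
#   check_usage /opt/local-path-provisioner/<pvc-directory> $((5 * 1024 * 1024))
```

A check like this only reports the overshoot after the fact; it does not stop the pod from filling the host filesystem.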

Output from my lab

# kubectl get pvc
NAME           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
nginx-pvc123   Bound    pvc-884f3c00-88ab-4b62-8fb5-76a41b59afee   5Gi        RWO            local-path     <unset>                 10s

# kubectl get pod -o wide
NAME        READY   STATUS    RESTARTS   AGE   IP              NODE                  NOMINATED NODE   READINESS GATES
nginx-pod   1/1     Running   0          43s   10.20.157.127   node02.pbandark.com   <none>           <none>

# kubectl exec -it nginx-pod -- bash
root@nginx-pod:/# cd /usr/share/nginx/html
root@nginx-pod:/usr/share/nginx/html# dd if=/dev/zero of=test.dat bs=1G count=10
10+0 records in
10+0 records out
10737418240 bytes (11 GB, 10 GiB) copied, 26.509 s, 405 MB/s    ## <=====

3. OpenEBS

OpenEBS offers more advanced local storage options, including hostPath volumes, LVM-backed volumes, and replicated (highly available) volumes.

Steps

- Install OpenEBS:

helm repo add openebs https://openebs.github.io/openebs
helm install openebs --namespace openebs openebs/openebs -f values_4.1.1.yaml --create-namespace

- Verify the installation:

kubectl get deploy -n openebs
kubectl get sc

Types of Local Storage with OpenEBS

i. Local PV HostPath

The behavior here is similar to the Rancher local-path-provisioner: a local directory on the Kubernetes node is used to store the data.
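A test claim for this mode could look like the sketch below. It assumes the chart created a StorageClass named `openebs-hostpath` (confirm the actual name with `kubectl get sc`); the PVC name `openebs-hostpath-pvc` and the file name are illustrative. The script only writes the manifest so it can be reviewed first.

```shell
#!/bin/sh
# Sketch: write a test PVC for OpenEBS Local PV HostPath.
# The StorageClass name is an assumption; the PVC and file names are illustrative.
manifest="openebs-hostpath-pvc.yaml"

cat > "$manifest" <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: openebs-hostpath-pvc
spec:
  # Assumed StorageClass name; check `kubectl get sc` in your cluster
  storageClassName: openebs-hostpath
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
EOF

echo "wrote $manifest"
# Review it, then apply it:
#   kubectl apply -f openebs-hostpath-pvc.yaml
```

A pod can then reference the claim through `persistentVolumeClaim.claimName`, exactly as in the local-path-provisioner example.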

ii. Local PV LVM

To use LVM with OpenEBS, first create a dummy (loop) device on the node and build a Volume Group (VG) on it:

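truncate -s 1024G /tmp/disk.img
# losetup prints the loop device it picked (for example /dev/loop0); reuse that name below
LOOP_DEV=$(sudo losetup -f /tmp/disk.img --show)
sudo pvcreate "$LOOP_DEV"
sudo vgcreate lvmvg "$LOOP_DEV"

- Create a StorageClass for LVM: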

# sc.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-lvmpv
allowVolumeExpansion: true
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
parameters:
  storage: "lvm"
  volgroup: "lvmvg"
provisioner: local.csi.openebs.io

- Create test PVC and Pod:

# pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: csi-lvmpv
spec:
  storageClassName: openebs-lvmpv
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 15Gi

# test_pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pod
spec:
  containers:
  - name: nginx
    image: nginx
    volumeMounts:
    - name: nginx-storage
      mountPath: /var/log/nginx
  volumes:
  - name: nginx-storage
    persistentVolumeClaim:
      claimName: csi-lvmpv

- Verify the LVM creation on the worker node where the pod is scheduled:

vgs
lvs

- Unlike the directory-based options, the PVC here limits the storage available in the pod to 15Gi, as seen from the following:

kubectl exec -it test-pod -- df -h /var/log/nginx

Output from my lab:

root@node01:/mnt/openebs/lvm# kubectl get pvc
NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS    AGE
csi-lvmpv   Bound    pvc-dd80d027-a59f-4645-a03c-1f49628fe9bc   15Gi       RWO            openebs-lvmpv   10s

# kubectl get pod -o wide
NAME       READY   STATUS    RESTARTS   AGE   IP            NODE                  NOMINATED NODE   READINESS GATES
test-pod   1/1     Running   0          29s   10.52.34.34   node03-pbandark.com   <none>           <none>

# ssh node03-pbandark.com
root@node03:~# vgs
  VG    #PV #LV #SN Attr   VSize     VFree
  lvmvg   1   1   0 wz--n- <1024.00g <1009.00g
root@node03:~# lvs
  LV                                       VG    Attr       LSize  Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
  pvc-dd80d027-a59f-4645-a03c-1f49628fe9bc lvmvg -wi-ao---- 15.00g

## The PVC I created is 15Gi, so the pod cannot consume more than 15Gi of storage
# kubectl exec -it test-pod -- bash
root@test-pod:/# df -h /var/log/nginx
Filesystem                                                       Size  Used Avail Use% Mounted on
/dev/mapper/lvmvg-pvc--dd80d027--a59f--4645--a03c--1f49628fe9bc   15G  2.1M   15G   1% /var/log/nginx
root@test-pod:/var/log/nginx# dd if=/dev/zero of=test.dat bs=1G count=20
dd: error writing 'test.dat': No space left on device    ## <====
15+0 records in
14+0 records out
15725346816 bytes (16 GB, 15 GiB) copied, 33.9467 s, 463 MB/s

iii. Replicated PV – Mayastor

Mayastor, part of OpenEBS, replicates persistent volumes (PVs) across nodes to keep your data highly available.

- Make sure you go through the prerequisites section.

- Create a dummy device (on each node) to serve as the disk for the OpenEBS DiskPool resource:

truncate -s 500G /tmp/disk2.img
sudo losetup -f /tmp/disk2.img --show

- Create DiskPool resources

Output from my lab:

# cat disk_pool_node01.yaml
apiVersion: "openebs.io/v1beta2"
kind: DiskPool
metadata:
  name: pool-on-node01
  namespace: openebs
spec:
  node: node01.pbandark.com
  disks: ["/dev/loop10"]

# cat disk_pool_node02.yaml
apiVersion: "openebs.io/v1beta2"
kind: DiskPool
metadata:
  name: pool-on-node02
  namespace: openebs
spec:
  node: node02.pbandark.com
  disks: ["/dev/loop7"]

# cat disk_pool_node03.yaml
apiVersion: "openebs.io/v1beta2"
kind: DiskPool
metadata:
  name: pool-on-node03
  namespace: openebs
spec:
  node: node03-pbandark.com
  disks: ["/dev/loop6"]

# kubectl get diskpool -A
NAMESPACE   NAME             NODE                  STATE     POOL_STATUS   CAPACITY       USED          AVAILABLE
openebs     pool-on-node01   node01.pbandark.com   Created   Online        536342429696   16106127360   520236302336
openebs     pool-on-node02   node02.pbandark.com   Created   Online        536342429696   16106127360   520236302336
openebs     pool-on-node03   node03-pbandark.com   Created   Online        536342429696   16106127360   520236302336

- Create a StorageClass for Replicated Mayastor PV:

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: mayastor-replicated-3
parameters:
  ioTimeout: "30"
  protocol: nvmf
  repl: "3"
provisioner: io.openebs.csi-mayastor
volumeBindingMode: Immediate

- Create test PVC and Pod:

# test_pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: csi-replicated-pvc
spec:
  storageClassName: mayastor-replicated-3
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 15Gi

# test_pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pod
spec:
  containers:
  - name: nginx
    image: nginx
    volumeMounts:
    - name: nginx-storage
      mountPath: /var/log/nginx
  volumes:
  - name: nginx-storage
    persistentVolumeClaim:
      claimName: csi-replicated-pvc

This will provision a 15Gi volume replicated across three nodes (repl: "3"), ensuring high availability. The data will remain available even if one of the nodes fails.
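To see what replication costs in raw capacity, the arithmetic can be sketched in shell. With `repl: "3"`, each pool holds a full copy of the 15Gi volume, which matches the 16106127360 bytes in the USED column of the DiskPool output from my lab. The function names below are illustrative.

```shell
#!/bin/sh
# Sketch: raw capacity consumed by a replicated volume.
# gib_to_bytes and raw_usage_bytes are illustrative helper names.

# Usage: gib_to_bytes <size-in-GiB>
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

# Usage: raw_usage_bytes <volume-size-in-GiB> <replica-count>
raw_usage_bytes() {
    echo $(( $(gib_to_bytes "$1") * $2 ))
}

echo "per-pool usage: $(gib_to_bytes 15) bytes"
echo "total raw usage with 3 replicas: $(raw_usage_bytes 15 3) bytes"
```

In other words, plan pool sizes around volume size times replica count: this single 15Gi claim consumes roughly 45GiB of raw disk across the three pools.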

Conclusion

With this guide, you have learned how to install and configure three different local storage options for Kubernetes: HostPath, Rancher local-path-provisioner, and OpenEBS. Each of these options has its own strengths and use cases, making them suitable for different deployment needs. If you have any questions, please leave a comment or ping me on LinkedIn.